EU AI Act: What does the new AI legislation mean for customer service?

With the EU AI Act, the European Union has adopted the world’s first comprehensive regulation for artificial intelligence (AI). But what does this mean for companies that use AI in customer service? Do existing systems need to be adapted? What new regulations are there – and what happens if they are ignored? In this article, we explain the most important points and show what companies should pay attention to now.

Why does the EU AI Act exist?

The EU AI Act is intended to ensure that artificial intelligence is used responsibly. The law takes a risk-based approach: different rules apply depending on how critical an AI application is. While high-risk systems (e.g. AI in the financial sector or in medicine) are subject to strict requirements, AI in customer service is primarily subject to transparency obligations.

What new rules apply to AI in customer service?

Companies that use AI-supported systems such as chatbots, AI agents or automated customer service processes must comply with certain requirements in future:

1. Transparency obligation: customers must know that they are interacting with an AI

A chatbot must not give the impression of being a human agent. This means that every AI-supported dialogue must be clearly labelled as such. Companies should therefore review their systems and ensure that bots and virtual assistants clearly identify themselves as AI.

2. AI-generated content must be recognisable

Replies generated by AI must not be sent to customers without being labelled. This applies, for example, to automatically generated emails, chat responses or documents. Companies should ensure that such content is labelled as AI-generated – for example by including a note in the message.

3. Emotion recognition and biometric analyses are severely restricted

Tools that analyse emotions from speech or facial expressions are subject to strict rules and, in some contexts, banned altogether. Companies that use such technologies should check carefully whether their specific use is still permitted.

4. "Human in the Loop": AI interaction must be transferable to a human at any time

Customers must not be stuck in an endless loop with an AI. They must be able to hand over the interaction to a human support agent at any time. Companies should therefore ensure that their systems allow for easy escalation to human support.

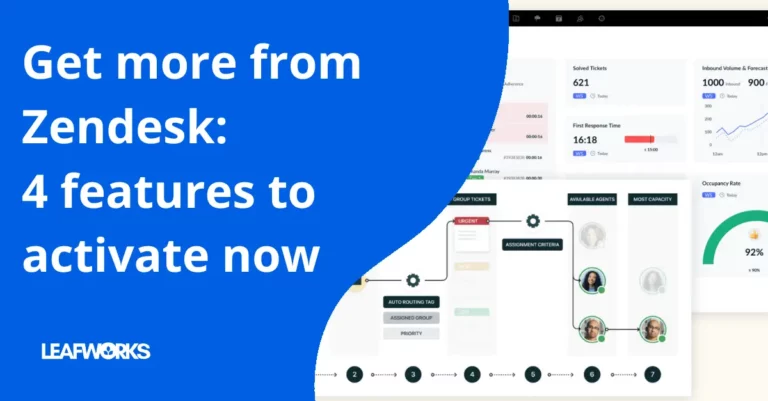

What does this mean for companies that use Zendesk?

In particular, companies that use AI-supported solutions such as Zendesk AI Agents, automated responses or chatbots should review their systems. Adjustments may be necessary to comply with the new requirements. These include:

- Labelling AI agents and chatbots: Clear indications that customers are talking to an AI.

- Verification of automated responses: Ensure that generated content is recognisable as such.

- Integration of escalation options: Incorporate simple forwarding to human agents.
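
The three adjustments above can be sketched in a few lines of Python. This is a minimal illustration and not Zendesk's API: the names `AI_DISCLOSURE`, `AI_CONTENT_NOTE`, `ESCALATION_KEYWORDS`, `BotReply`, `wants_human` and `build_reply` are all hypothetical, and the keyword check is a deliberately naive stand-in for whatever escalation trigger your platform provides.

```python
import re
from dataclasses import dataclass

# Hypothetical wording; adapt it to your own tone of voice and legal advice.
AI_DISCLOSURE = "You are chatting with an AI assistant."
AI_CONTENT_NOTE = "[This reply was generated by AI]"

# Hypothetical trigger words for a handover request (naive on purpose).
ESCALATION_KEYWORDS = frozenset({"human", "agent", "person"})


@dataclass
class BotReply:
    text: str
    handed_over: bool = False


def wants_human(message: str) -> bool:
    """Return True if the customer's message contains a handover keyword."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return not words.isdisjoint(ESCALATION_KEYWORDS)


def build_reply(customer_message: str, generated_text: str, first_turn: bool) -> BotReply:
    """Wrap AI-generated text with a disclosure and a label, or hand over."""
    # Escalation option: a request for a human always wins over the bot answer.
    if wants_human(customer_message):
        return BotReply("I am connecting you to a human support agent.", handed_over=True)

    parts = []
    if first_turn:
        # Labelling: disclose the AI at the start of the conversation.
        parts.append(AI_DISCLOSURE)
    parts.append(generated_text)
    # Recognisable content: mark every generated reply as AI-generated.
    parts.append(AI_CONTENT_NOTE)
    return BotReply("\n".join(parts))
```

In a real deployment the handover branch would call the routing or ticketing function of the platform in use instead of returning a fixed sentence.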

Deadlines

The EU AI Act sets out clear guidelines for the use of artificial intelligence in Europe. Companies and organisations must be prepared for different implementation deadlines, depending on the type of AI systems concerned:

2 February 2025 (6 months after entry into force): AI systems that fall under the ban must be switched off by this date.

2 August 2025 (12 months after entry into force): The rules for general-purpose AI (GPAI) models apply from this date.

2 August 2026 (24 months after entry into force): High-risk AI systems, as defined in Annex III of the Act, must be compliant by this date.

2 August 2027 (36 months after entry into force): A longer implementation period applies to other high-risk AI systems covered by Annex I.

These deadlines provide companies with the necessary framework to adapt their systems and processes to the new regulatory requirements. Compliance with the regulations is crucial in order to avoid sanctions and ensure the legally compliant use of AI technologies in the EU.
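
For teams that want to track these dates in their own tooling, the deadlines can be kept as a small lookup table. The following is only a sketch: the dates are the ones listed above, while the labels and the function names `rules_in_effect` and `next_deadline` are made up for illustration.

```python
from datetime import date
from typing import Optional, Tuple

# Dates as listed in this article; the labels are shortened for illustration.
DEADLINES = [
    (date(2025, 2, 2), "Prohibited AI practices"),
    (date(2025, 8, 2), "General-purpose AI (GPAI)"),
    (date(2026, 8, 2), "High-risk AI systems (Annex III)"),
    (date(2027, 8, 2), "High-risk AI systems (Annex I)"),
]


def rules_in_effect(today: date) -> list:
    """Return the labels of all deadlines that have been reached on `today`."""
    return [label for deadline, label in DEADLINES if today >= deadline]


def next_deadline(today: date) -> Optional[Tuple[date, str]]:
    """Return the next upcoming (date, label) pair, or None if all have passed."""
    upcoming = [(deadline, label) for deadline, label in DEADLINES if deadline > today]
    return min(upcoming) if upcoming else None
```

Calling `rules_in_effect(date.today())` then shows at a glance which stages already apply.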

What are the penalties for non-compliance?

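The EU AI Act provides for severe penalties: companies that engage in prohibited AI practices risk fines of up to €35 million or 7% of their global annual turnover, whichever is higher. Lower caps apply to other violations, including breaches of the transparency obligations (up to €15 million or 3%). It is therefore worth reacting to the new requirements at an early stage.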
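
To get a feeling for the order of magnitude, the upper limit can be worked out as a simple calculation. The sketch below assumes that the higher of the two values applies, which is how the Act frames the cap for larger companies; the function name `max_fine_eur` and its parameters are hypothetical, and the result is a ceiling, not a prediction of an actual fine.

```python
def max_fine_eur(annual_global_turnover_eur: int,
                 fixed_cap_eur: int = 35_000_000,
                 turnover_percent: int = 7) -> float:
    """Upper limit of the fine: the higher of the fixed cap and the turnover share.

    Assumption: the "whichever is higher" rule applies (larger companies).
    """
    turnover_based = annual_global_turnover_eur * turnover_percent / 100
    return max(fixed_cap_eur, turnover_based)


# A company with EUR 100 million turnover: 7% is EUR 7 million, so the fixed cap applies.
small = max_fine_eur(100_000_000)

# A company with EUR 1 billion turnover: 7% is EUR 70 million, which exceeds the fixed cap.
large = max_fine_eur(1_000_000_000)
```

Below roughly €500 million in turnover the fixed amount is the relevant ceiling; above that, the percentage takes over.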

Conclusion: companies should act now

The new requirements of the EU AI Act are not a distant prospect: the first deadline has already passed. Companies that use AI in customer service should get to grips with the new rules now. Those who act proactively can not only avoid legal risks but also strengthen the trust of their customers.

Leafworks helps companies to make Zendesk and other AI-supported customer service solutions legally compliant. This includes customising outbound notifications for AI-generated text, optimising bot flows so that AI-driven conversations comply with regulatory requirements, and reviewing and adjusting agent handover settings so that the transition from AI to human agents is smooth and meets human-in-the-loop requirements. Talk to us about your processes!
