1. Is ChatGPT worth it for companies?

Many organisations ask themselves: is this more of a gimmick or does it actually make strategic sense? The honest answer is: it depends. And it depends on three things: the specific use case, the available data – and the organisation’s readiness for change.

- Use case: Are there processes with high text volume, many repetitions or manual research work?

- Data access: Are structured or at least easily searchable resources available (e.g. FAQs, guidelines, CRM notes)?

- Maturity: Is the organisation ready to adapt processes – and not just introduce new tools?

Numbers that set the frame:

- According to an MIT x BCG study (2023), consultants report time savings of 40–50% on text-based tasks when using GPT-4.

- In Leafworks projects involving Zendesk automation, response times in first-level support improved by up to 25%.

- Entry costs are low: individual users start at €20/month, small integration projects from around €5,000.

Conclusion: ChatGPT is worth it if the implementation is tied to a clear objective. Without context, data and process adaptation, the impact often remains limited.

Learn more about our experience with AI in customer service →

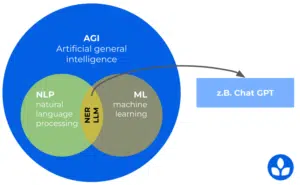

2. What ChatGPT is – and why it matters for companies

ChatGPT is based on a large language model (LLM) that learns from billions of text examples to recognise and reproduce language patterns. This allows it to handle text-based tasks within seconds. In a business context, this helps organisations to:

- create content faster and more consistently – in customer service, marketing or HR

- make knowledge accessible across locations – e.g. for onboarding, support or internal communication

- automate repetitive tasks without introducing new systems

3. What companies expect from ChatGPT – and how to actually achieve it

Many organisations start experimenting with ChatGPT, but the biggest effects occur where generative AI is integrated into existing systems and workflows – for example in CRM, service or knowledge processes. This is where solutions like the various Zendesk AI features come in, making generative functions useful in clearly defined contexts.

| Expectation of ChatGPT | What companies imagine | How AI is implemented in real business systems (examples) |
|---|---|---|
| faster results | Automatic replies, text variants, summaries | Generative AI in tools like Zendesk AI Copilot, office suites, knowledge management systems |
| better use of knowledge | GPT “knows everything” and finds everything | Systems with structured knowledge bases: Help Center, internal manuals, Confluence, CRM data |
| less routine | GPT handles standard texts and repetitive tasks | AI-based workflows: ticket classification, document summaries, HR/IT self-service, CRM automations |
| higher quality | GPT checks tone & content | AI-driven QA functions in tools like Zendesk QA, Grammarly Business, content guidelines |
| scale without hiring | GPT works in parallel | Productive AI Agents in systems like Zendesk AI Agents, workflow automation, chatbots |
| simpler onboarding | GPT explains processes | AI-supported assistance systems, step-by-step guides, automated ticket or document summaries |
| multilingual operations | GPT translates everything automatically | Integrated language & intent detection: Language Detection, multilingual bots, translation add-ons |
| better analysis | GPT identifies trends in text | AI analytics: sentiment analysis, pattern detection, volume forecasting, workforce forecasting |

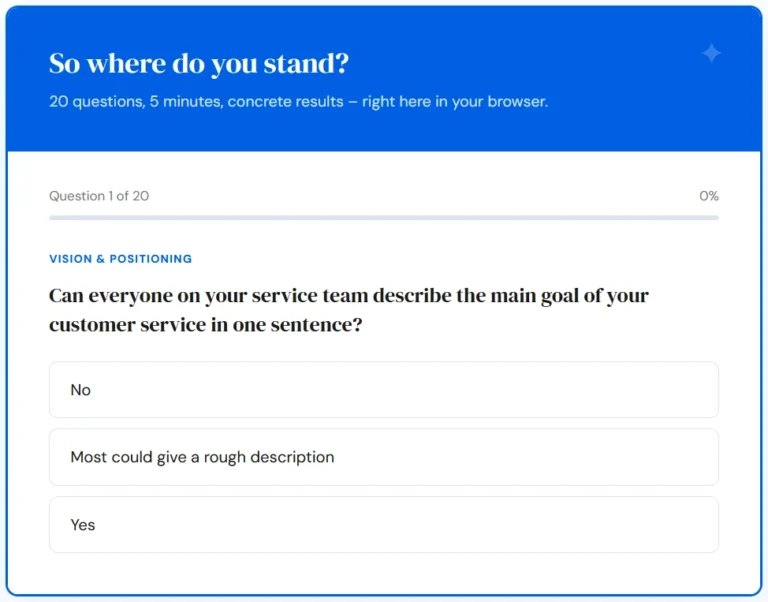

4. Entry scenarios and costs

The path to productive AI use is highly individual. Some teams start with simple text automation, others integrate large language models directly into existing systems. Three entry models have proven successful in practice:

1. Direct use (individuals + small teams)

Tools such as ChatGPT (chat.openai.com), Microsoft Copilot or Notion AI enable quick experiments – ideal for testing initial effects or speeding up internal tasks.

Typical use cases:

- text variants, summaries

- research support

- ideation for marketing and HR

Costs: €20–50/month per user

Limitation: No business context, no control over data flows, no process integration.

2. Integration into existing systems

Here, AI functions are embedded directly into the tools teams use every day. This is usually where experiments turn into real productivity.

Examples:

- generative functions in CRM and support systems

- AI-based classification and summarisation

- automated workflows (e.g. HR, IT or service processes)

A practical example: companies that use Zendesk often rely on Zendesk AI features or AI apps from Knots. Copilot, AI Agents and AI analytics are integrated directly into the system, in a GDPR-compliant and role-based way.

Cost range for integration projects: approx. €5,000–15,000 depending on system landscape, interfaces, roles and use cases

3. Custom AI solutions / Custom GPTs

Organisations with highly specific requirements or strict data sovereignty develop their own GPT applications or LLM-based services.

Typical motivations:

- internal knowledge models

- domain-specific assistants

- complex automation logic

Note: these projects come with higher integration and security requirements.

Costs: from approx. €20,000 (incl. API integration, data preparation, prompt engineering, monitoring)
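To put the cost figures of the three entry models side by side, the following minimal sketch turns the per-user subscription range into a yearly amount. The figures come from the text above; the team size of 10 users and the function name `direct_use_annual_cost` are assumptions chosen purely for illustration, not a pricing model.

```python
# Cost figures taken from the three entry models above (EUR).
DIRECT_USE_PER_USER_MONTH = (20, 50)   # subscription range per user and month
INTEGRATION_PROJECT = (5_000, 15_000)  # approximate one-off project range
CUSTOM_SOLUTION_MIN = 20_000           # "from approx." lower bound only


def direct_use_annual_cost(users: int) -> tuple[int, int]:
    """Return the (low, high) yearly subscription cost for a team."""
    low, high = DIRECT_USE_PER_USER_MONTH
    return users * low * 12, users * high * 12


# Hypothetical team size, chosen only for illustration.
team_size = 10
low, high = direct_use_annual_cost(team_size)
print(f"Direct use, {team_size} users: EUR {low:,} to {high:,} per year")
print(f"Integration project: EUR {INTEGRATION_PROJECT[0]:,} to {INTEGRATION_PROJECT[1]:,} one-off")
print(f"Custom solution: from EUR {CUSTOM_SOLUTION_MIN:,}")
```

Under these assumptions, a ten-person team on direct use lands in roughly the same yearly range as a small integration project, which is one reason the choice usually depends on process fit rather than on price alone.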

5. Prerequisites for successful AI adoption

AI only creates real value when the fundamentals are in place. Many companies do not fail because of the technology, but because of missing foundations: data, structure, ownership or clear processes. Four prerequisites have proven essential:

1. Reliable knowledge sources

LLMs are only as good as the context they receive. Companies need well-structured or at least easily accessible content – e.g.:

- FAQs, internal guidelines, product and process documentation

- CRM notes or customer histories

- knowledge bases, Confluence spaces, Help Centers

If this foundation is missing, the content structure must be improved first – otherwise results remain unreliable.

2. Clarity on data flows & data protection

Before AI is used in an organisation, fundamental questions must be answered:

- Which data may be processed – and which may not?

- Who has access?

- How are outputs reviewed, approved and documented?

- Which roles are allowed to use AI functions?

Without governance, shadow AI emerges. Tools such as Zendesk, Microsoft, Atlassian or dedicated LLM environments (e.g. Azure OpenAI) provide controllable frameworks.
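The governance questions above (which data, which roles, how outputs are reviewed) can be written down as an explicit rule set rather than left implicit. The sketch below is one possible shape for such a rule set. The class `AIUsagePolicy`, the role names and the data classes are hypothetical and would need to be replaced with an organisation's own definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsagePolicy:
    """Hypothetical policy object; all names and values are illustrative."""
    allowed_roles: frozenset = frozenset({"support_agent", "team_lead"})
    blocked_data_classes: frozenset = frozenset({"health_data", "payment_data"})
    review_required: bool = True  # outputs need human approval before use


def may_use_ai(policy: AIUsagePolicy, role: str, data_class: str) -> bool:
    """Allow a request only for permitted roles and non-blocked data."""
    return (
        role in policy.allowed_roles
        and data_class not in policy.blocked_data_classes
    )


policy = AIUsagePolicy()
print(may_use_ai(policy, "support_agent", "faq_content"))   # permitted role, harmless data
print(may_use_ai(policy, "support_agent", "payment_data"))  # blocked data class
print(may_use_ai(policy, "intern", "faq_content"))          # role not permitted
```

Keeping the policy in one documented place makes it easier to audit and to mirror in the role and permission settings of whichever platform is actually used.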

3. Context competence instead of just prompting

Prompting is important, but in a business context it is far from the most relevant skill. What matters is how well teams can:

- clearly define problems

- provide the necessary context

- review and refine results

- use AI as part of existing workflows

Prompting is therefore not “trick knowledge” but part of a productive dialogue between humans, systems and models.

4. Process and organisational maturity

AI can only improve what already works. Without defined processes, roles and responsibilities, AI amplifies chaos instead of solving it.

Mature organisations are characterised by:

- consistent processes (support, HR, IT, sales, marketing)

- clear escalation paths

- reliable data quality

- documented workflows

- technical integration readiness

Especially in systems like Zendesk, Microsoft or ERP environments, the pattern is clear: AI has the strongest impact where processes are well-defined – not where the model is expected to “work magic”.

In short:

The use of ChatGPT & co. is not primarily a technical question, but a question of data, structure, processes and organisation. Those who build the foundations achieve measurable benefits quickly. Those who don’t rarely move beyond experimentation.

Excursus: what good prompts are really made of

Prompting is a dialogue, not a one-way instruction. Good prompts are based on:

- role awareness: who is speaking – a product manager, an agent, a bot?

- goal orientation: what should be produced – information, action, suggestion?

- context depth: for whom, on which channel, with what level of knowledge?

- feedback capability: good results require iteration – and follow-up questions.

- proven patterns: shortcuts like “TL;DR”, “ELI5” or “Humanize” can speed up the workflow.

Prompting can be standardised, documented and shared across teams – for example through templates or an internal prompt library.
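As an illustration of what an entry in such a prompt library might look like, the following minimal sketch uses Python's standard `string.Template`. The template text, the name `SUPPORT_REPLY_PROMPT` and the example values are assumptions. The fields simply mirror the role, goal and context elements listed above.

```python
from string import Template

# Hypothetical library entry; fields mirror role, goal and context from the list above.
SUPPORT_REPLY_PROMPT = Template(
    "You are $role.\n"
    "Goal: $goal.\n"
    "Audience and channel: $context.\n"
    "Ask a follow-up question if information is missing."
)


def build_prompt(role: str, goal: str, context: str) -> str:
    """Fill the shared template; raises KeyError if a field is missing."""
    return SUPPORT_REPLY_PROMPT.substitute(role=role, goal=goal, context=context)


prompt = build_prompt(
    role="a first-level support agent",
    goal="draft a reply that explains the return process",
    context="a new customer writing via email, no prior product knowledge",
)
print(prompt)
```

A shared template like this keeps role, goal and context explicit, so colleagues can reuse and refine the same structure instead of starting from a blank prompt each time.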

6. Risks & limitations

Generative AI creates significant potential – but also introduces risks that organisations must consciously manage. Four aspects are particularly relevant in practice:

1. Hallucinations & unreliable output

LLMs generate content even when facts are missing or incorrect. Even strong prompts cannot prevent models from producing plausible but wrong answers. Every output therefore requires review, context and clear usage rules.

2. Lack of transparency (“black box”)

Why a model arrives at a certain answer is often not traceable. This is not a technical bug but a core architectural feature of LLMs. Organisations need guidelines that define when AI provides suggestions – and when humans must make decisions.

3. Data protection & shadow AI

Without clear guidance, unsafe practices emerge quickly: private accounts, copy-pasting sensitive content or uncontrolled sharing. Secure environments (e.g. Azure OpenAI, controlled integrations, role models) are essential before AI is widely adopted.

4. Overestimated impact

Many organisations start using AI without clear goals, data or process understanding. The result: no noticeable improvement, and sometimes even more effort. The highest ROI occurs where AI is embedded into existing systems and processes, not in isolated experiments.

7. Conclusion: what ChatGPT can deliver – and when expert support makes sense

ChatGPT is no longer a hype topic but a tool that can provide real advantages – provided the foundations are in place. Teams that approach AI systematically and integrate it meaningfully into their workflows achieve measurable effects: faster results, more consistent content, less routine work and better knowledge utilisation.

The key lies not in the model itself, but in:

- clear use cases

- clean data

- the right systems

- good processes

- and realistic expectations

This is exactly where we come in. Leafworks supports you in:

- finding the right starting point – whether small, integrated or strategic

- embedding AI into existing systems (e.g. Zendesk, internal tools, CRM)

- building AI competence within your team – including prompting & contextual work

- making data and workflows usable in a GDPR-compliant way

In short: we provide sparring, structure and experience – so AI doesn’t remain an experiment but creates real impact.